AI makes an ass out of you and me

Making the right assumptions is a big part of what we think intelligence is, but what do they mean without understanding?

One thing computers can’t do is make assumptions. At least until now.

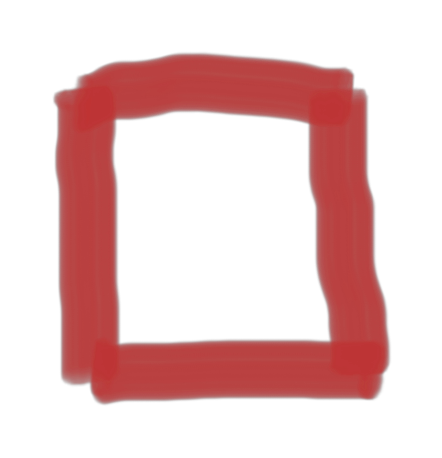

I want you to describe how to draw a square.

Maybe:

Draw four lines the same length that touch, two horizontally and two vertically.

Does that sound about right? If I were drawing the shape as you described it, I’d probably assume you were talking about a square:

However, following the exact same directions without assumptions, I might draw something completely different:

And that’s where a computer would be stuck. For a computer to follow the instructions and draw a square, you would have to be specific about the coordinates of each point.
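To make that gap concrete, here is a minimal Python sketch. It is an illustration under my own assumptions, not a real drawing program: the helper names (`follows_instructions`, `looks_closed`) and the two example shapes are hypothetical, and "touch" is read narrowly as "shares an endpoint with at least one other line". It checks only the literal wording of the instructions, which is all a computer has to go on.

```python
import math
from collections import Counter

# A line is a pair of (x, y) endpoints. All names here are hypothetical.

def length(seg):
    (x1, y1), (x2, y2) = seg
    return math.hypot(x2 - x1, y2 - y1)

def is_horizontal(seg):
    return seg[0][1] == seg[1][1]

def is_vertical(seg):
    return seg[0][0] == seg[1][0]

def touches(a, b):
    # Assumption: "touch" means the two lines share an endpoint.
    return bool(set(a) & set(b))

def follows_instructions(segs):
    """Check only what the sentence literally says, nothing more."""
    return (
        len(segs) == 4
        and len({length(s) for s in segs}) == 1           # same length
        and sum(is_horizontal(s) for s in segs) == 2      # two horizontal
        and sum(is_vertical(s) for s in segs) == 2        # two vertical
        and all(any(touches(a, b) for b in segs if b is not a) for a in segs)
    )

def looks_closed(segs):
    """Rough extra check a human applies silently: every corner joins two lines."""
    counts = Counter(point for seg in segs for point in seg)
    return all(n == 2 for n in counts.values())

# Explicit coordinates: the only thing the computer can actually draw from.
square = [((0, 0), (1, 0)), ((1, 0), (1, 1)), ((1, 1), (0, 1)), ((0, 1), (0, 0))]
staircase = [((0, 0), (1, 0)), ((1, 0), (1, 1)), ((1, 1), (2, 1)), ((2, 1), (2, 2))]

for name, shape in [("square", square), ("staircase", staircase)]:
    print(name, follows_instructions(shape), looks_closed(shape))
```

Both shapes pass `follows_instructions`, but only the square passes `looks_closed`. The "closed shape" requirement was never in the sentence; it is an assumption the human listener supplied.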

Human communication is based on assumptions. Think of it as how we compress our messages into verbal form. The receiver(s) will interpret what you are saying by making assumptions and filling in gaps in the information you communicate. It's imperfect (and often how wars start) but it's what we have.

Computers can be programmed to make specific assumptions (for example, if a human asks for four lines that touch, they are almost certainly talking about a square), but humans make countless assumptions every second of every day, based on a myriad of contextual clues, just to survive.
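A hand-coded assumption of that kind might look like the sketch below. Everything in it is hypothetical: the function name `square_from_request`, the keyword matching, and the default side length and origin are choices I made for illustration. Note how narrow it is. One assumption, one phrasing, one shape.

```python
def square_from_request(request, side=1.0, origin=(0.0, 0.0)):
    """Hypothetical rule: 'four lines' that 'touch' is assumed to mean a square."""
    text = request.lower()
    if "four lines" in text and "touch" in text:
        x, y = origin
        # The assumption fills in what the request left out: closed, right-angled.
        return [(x, y), (x + side, y), (x + side, y + side), (x, y + side)]
    # No rule matches, so the program has nothing to fall back on.
    return None

corners = square_from_request(
    "Draw four lines the same length that touch, two horizontally and two vertically."
)
print(corners)
print(square_from_request("Draw a box"))
```

The first request returns four corner coordinates; "Draw a box" returns `None`, even though any person would know what was meant. Every further assumption would need its own rule.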

Making the right assumptions is a big part of what we think intelligence is. But programming all human assumptions into digital media seemed as likely as brain transplant surgery. Instead, “Artificial” Intelligence research tried for decades to teach computers to make assumptions from what they know and understand, as a human might (in other words, to reason), but with little success.

The problem isn’t with computers, however; the problem is that humans make a lot of assumptions when it comes to communication. Humans don’t just follow the literal meaning of words being spoken; we are also calculating what the person communicating is actually thinking, something computers couldn’t do, because our assumptions are based on the synthesis of our physical experiences.

Making the right assumptions is a big part of what we think intelligence is. But programming all human assumptions into digital media seemed as likely as brain transplant surgery: the realm of goofy speculative fiction.

Then came the Large Language Models (LLMs).

Imagine if you could view and remember every word ever written, every sound ever made, and every image ever recorded. You could then bring these up at any time to see how human beings thought and responded to each other in all of these situations. And you could take how they responded and use that to make assumptions about what other human beings mean when they ask you something. Not just things like drawing squares, but also that when a human uses certain word patterns it means they are sad, so you should use word patterns to help soothe them. Or maybe to take advantage of them?

The result is that computers have become much better at making assumptions about what humans mean in our own natural language. We can now get complex results from them without having to know a specific programming language. What computers have not done, despite popular appearance, is become better at understanding any of this information. It looks like they have, but in fact all they are doing is reflecting back what humans understand in aggregate, as interpreted by algorithms controlled by the computers' owners.

This is not Artificial Intelligence.

It is Assumptive Intelligence and it is making an ass out of you and me.